v3 API Development Update

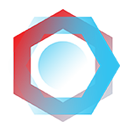

Today we're going to give you an update on the v3 API since we launched the open beta 10 days ago. We've been making some logical changes to how the risk score works and how we determine whether an IP is anonymous, and we've also discovered and corrected some issues around certain detection types.

We've also deployed the API on some websites and services, both run by us and in cooperation with some customers, so we can discover and correct issues faster. It was as a result of this that the above issues were found and fixed.

We mentioned above that the risk scoring has been changed. We've also updated the API documentation to reflect this, which you can view here.

Dashboard Statistics Performance Update

When we launched the new version of the Dashboard we did have a stretch-goal of improving the time it takes for the statistics you generate (by making queries) to show up in the Dashboard. We were not able to complete this before the launch so it was pushed back, but I'm happy to say we launched it just under a week ago.

This did require a significant rewrite of how we collect and process statistics, which is why we decided to push it back instead of rushing through anything that could potentially cause loss of statistics or data corruption.

So the end result of this work is that it's now possible to generate a query and have it appear within your Dashboard in only a few seconds. It's still unlikely to be that fast every time, but we're now often seeing positive detection log entries and other statistics showing within 30 seconds, as opposed to the up to 8 minutes that we had seen in recent times.

The reasons that the statistics had begun to lag behind are fourfold.

- We began to highly compress statistics to reduce loading times of data from disk to memory and to reduce storage use. This caused a high amount of CPU usage when altering statistics as it required reading data, decompressing it, altering it, compressing it and then saving the data back to disk. Although this took only a few milliseconds per account, it added up.

- We have gained a lot more customers since we last altered how statistics are handled. We've grown by more than 1000x, so there are just a lot more statistics to process.

- We had structured processing around 60 second intervals. Sometimes that meant the processing completed fast and there were many seconds where the processor did nothing, allowing the next 60 second interval to accumulate a lot of extra data. Essentially this fixed-size processing window created inefficiencies.

- Data was only collected from our nodes once a minute, so if you happened to generate a request in the very first second after a collection had taken place you would "miss the bus" so to speak. That delayed your statistics by a minimum of 1 minute and often longer due to the above processing overhead.

So to solve these problems, first of all, fresh data is no longer compressed when it arrives. It is instead compressed later by different processes. This single change decreased statistic processing time from a 75 second average to only 15 seconds. We also made the processor multithreaded on a per-account basis (to make sure there are no race conditions where two threads try to alter the same customer's data at the same moment).
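To illustrate the per-account threading idea, here is a minimal Python sketch. It is not our production code: the event shape (`account` and `type` keys) and the function names `process_account` and `process_batch` are made up for the example. The point is only that grouping work by account before handing it to threads means no two threads ever touch the same customer's data.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def process_account(account_id, events):
    """Fold one account's pending events into per-type counts."""
    totals = defaultdict(int)
    for event in events:
        totals[event["type"]] += 1
    return account_id, dict(totals)


def process_batch(events, max_workers=4):
    """Process a batch of events with one task per account."""
    # Group first, so each account is owned by exactly one task and
    # two threads can never alter the same account at the same time.
    by_account = defaultdict(list)
    for event in events:
        by_account[event["account"]].append(event)

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [
            pool.submit(process_account, account_id, account_events)
            for account_id, account_events in by_account.items()
        ]
        return dict(future.result() for future in futures)
```

Because the unit of work is a whole account, no locking is needed inside `process_account` itself.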

We also altered how the processing timer works. We no longer stick to a 60 second window. Instead the processor runs on all the statistics there are and, once finished, checks again for new stats to process and then processes those. Everything happens much more fluidly, and processing statistics near-instantly stops a large queue from forming, which also means the data that is there gets processed faster.
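The difference between a fixed window and this "process whatever is there, then look again" loop can be sketched in a few lines of Python. This is an illustration under our own assumptions, not the real processor: `run_processor`, `drain` and the `STOP` sentinel are hypothetical names, and an in-memory `queue.Queue` stands in for wherever pending statistics actually live.

```python
import queue

STOP = object()  # hypothetical sentinel used to shut the loop down


def drain(pending):
    """Return everything currently queued without blocking."""
    items = []
    while True:
        try:
            items.append(pending.get_nowait())
        except queue.Empty:
            return items


def run_processor(pending, handle_batch):
    """Process all available stats, then immediately check for more."""
    while True:
        # Block until at least one item exists (no fixed 60 second
        # timer), then take whatever else has arrived in the meantime.
        batch = [pending.get()] + drain(pending)
        stopping = any(item is STOP for item in batch)
        work = [item for item in batch if item is not STOP]
        if work:
            handle_batch(work)
        if stopping:
            return
```

With this shape the loop never sits idle while data is waiting, and batches stay small because they are picked up as soon as the previous one finishes.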

The final thing we did was alter the data collector to work similarly to the processor. Each of our servers that needs to present or collect data will now do so using events to indicate to each other when there is data ready to be collected. This is how we're now sometimes able to get to a sub-5 second query-to-log time with the new code: the stats are both collected and processed more often.
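As a rough picture of event-driven collection, the sketch below uses Python's `threading.Event` inside a single process. That is an assumption made purely for illustration (our servers signal each other over the network, and the `Node` class and `collect_when_ready` function are invented names), but the pattern is the same: the side holding data raises a flag, and the collector reacts to the flag instead of polling once a minute.

```python
import threading


class Node:
    """A stand-in for a server that accumulates statistics."""

    def __init__(self):
        self._lock = threading.Lock()
        self._buffer = []
        self.data_ready = threading.Event()

    def record(self, stat):
        with self._lock:
            self._buffer.append(stat)
            # Signal that there is something to collect.
            self.data_ready.set()

    def collect(self):
        with self._lock:
            # Clear the flag and take the buffer in one step so a
            # concurrent record() cannot be lost in between.
            self.data_ready.clear()
            stats, self._buffer = self._buffer, []
        return stats


def collect_when_ready(node, timeout=1.0):
    """Wait for the node's signal, then collect; return [] on timeout."""
    if node.data_ready.wait(timeout):
        return node.collect()
    return []
```

A request that arrives just after a collection no longer "misses the bus": recording it sets the flag again and the next collection can start straight away.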

Some of the work to make this possible was done with the introduction of the v3 API and how it structures data, which we back-ported to the v2 API prior to launch on the 19th of August. Each piece of statistical data is now stored uniquely, without reading or altering existing data. This reduces I/O-based blocking, where you want to write data to something that is currently being read or written to. And since each piece of metadata you're generating is now saved uniquely for each request you make to the API, it can be processed near-instantly because we don't have to wait for a large number of correlated requests to be batched together.

There is still more we can do here. We would love to get the time-to-log near-instant (1-3 seconds) and that may be achievable with further optimisations.
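One way to picture "stored uniquely without data reads or alterations" is a spool directory where every request gets its own file. The Python sketch below is an assumption about how such a scheme could look, not a description of our storage layer: the JSON format, the `.tmp` rename step and the names `write_stat` and `consume_stats` are all hypothetical.

```python
import json
import uuid
from pathlib import Path


def write_stat(spool_dir, stat):
    """Save one request's stat as its own file; nothing is read or altered."""
    path = Path(spool_dir) / f"{uuid.uuid4().hex}.json"
    tmp = path.with_suffix(".tmp")
    tmp.write_text(json.dumps(stat))
    # Rename into place so a reader never sees a half-written file.
    tmp.rename(path)
    return path


def consume_stats(spool_dir):
    """Read and remove every completed stat file in the spool."""
    stats = []
    for path in sorted(Path(spool_dir).glob("*.json")):
        stats.append(json.loads(path.read_text()))
        path.unlink()
    return stats
```

Since writers only ever create new files, they never wait on a file that is being read or written by something else, and each stat can be picked up as soon as it lands.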

Scraper Services

Last year we made a blog post where we took aim at scraper services. We have built profiles for many of the popular ones in the space that offer scraping via residential proxy (and there are a lot of these), but we've been largely silent on our efforts against these services since then. Over the past week we have added 10 new services with full provider data and we're actively looking to add more.

It is our goal to add 100 new ones this year, especially as we feel this is so important that we added a specific scraper detection type to the v3 API (the v2 API also presents these under the type: field). We have seen over the past few years that these services have exploded in popularity as many AI companies building LLMs attempt to scrape every webpage in existence and find themselves foiled by services like our own that list their hosting providers. As a result they're turning to paid residential scraping services to scrape data, and these usually go undetected.

This is why we're taking a much more active approach to these. Indeed, we're signing up to and paying many of them just to get access to their fleets of residential addresses. Honestly, it's very expensive to do it this way but it's the only reliable method. For the time being we're using a phased approach, targeting the least expensive ones first and seeing what kind of results that nets. Already we've noticed that a lot of the services are sourcing their proxies from the same place.

So that's the update for now!

We hope you're enjoying these blog posts where we detail what we're working on. We're very excited about the new API and thankful for all the feedback we've received so far; each piece we get inches us closer to a fully stable release. Thanks for reading and have a wonderful week!