
As the service has grown in popularity, we've run up against many different technical limitations. One was that our original API code was single-threaded, which limited our server hardware choices to systems with very high-frequency CPUs. This was a problem because the industry as a whole was moving towards many-core CPUs with lower clock frequencies.

We overcame this problem by rewriting everything to be fully multithreaded and to take advantage of as much pre-computed data as possible. This reduced average query latency from more than 350ms to below 10ms (excluding network overhead), and we refined these methods further with our v2 API, which launched several years ago.

Another major challenge has been database synchronisation. We like all our nodes to be in sync as much as possible, and we've gone through many iterations of our database system to achieve performance and latency that exceed our customers' demands.

But as our service has continued to grow, we've seen database demands increase exponentially. As we add more data to the API, improve logging for our customers and gain many more customers, the rate of database changes has reached hundreds of thousands per minute.

This is a lot of data to keep synchronised across so many servers, but we don't want to use a single central database because it would hurt our redundancy, our latency and our commitment to customer privacy. (Data at rest is encrypted, and nodes within a specific region must request the keys at the moment you make a request that needs access to your data stored in that physical region.)
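To illustrate the request-time key model described above, here is a minimal sketch of the access-control flow only. It is not our actual implementation: `RegionalKeyService`, `Node`, `request_key` and `handle_request` are hypothetical names, and no real encryption is performed. It simply shows a node holding no keys of its own, asking its region's key service for a key only when a request needs customer data, and being refused if it sits outside that region.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class RegionalKeyService:
    """Hypothetical per-region key holder; keys are only issued within its region."""
    region: str
    _keys: dict[str, bytes] = field(default_factory=dict)

    def store_key(self, customer_id: str, key: bytes) -> None:
        self._keys[customer_id] = key

    def request_key(self, node_region: str, customer_id: str) -> bytes:
        # Only nodes located in the same physical region may obtain a key.
        if node_region != self.region:
            raise PermissionError(
                f"node in {node_region!r} cannot access {self.region!r} keys"
            )
        return self._keys[customer_id]


@dataclass
class Node:
    """Hypothetical API node that never stores decryption keys itself."""
    region: str
    key_service: RegionalKeyService

    def handle_request(self, customer_id: str, needs_customer_data: bool) -> bytes | None:
        # Requests that don't touch customer data never trigger a key request.
        if not needs_customer_data:
            return None
        # The key is fetched at request time and is not cached on the node.
        return self.key_service.request_key(self.region, customer_id)
```

Because the node never caches the key, a compromised or misconfigured node outside the region has nothing to leak and cannot obtain one.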

And that brings us to today. Last week we reached a point where changes to the database were simply too frequent for us to keep up with, and we had a synchronisation failure affecting customer statistics and IP attack history data. Since then we've been working hard to build a new system that can better handle this volume of changes. We deployed it today, and we're hopeful it will give us breathing room until next year, when we'll likely have to revisit the situation.

Another change we've implemented is the use of solid-state drives (SSDs). Last year we decided that all new servers would be required to have either SATA or NVMe based SSDs, and 5 of our 9 servers currently run entirely on SSDs. We intend to migrate our existing hard disk drive based servers to SSDs as we naturally rotate them out of service. This gives us huge response time improvements for all database operations, including synchronisation.

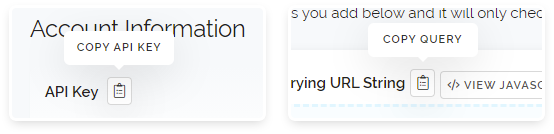

We also wanted to talk about account security and one of the ways we're looking to improve the security of your account. It has been brought to our attention that every automated email you receive from us contains your API key, and that because these keys are so important to your account, we should not display them so frequently.

So as of today, we will no longer include your API key in automated emails, except when you sign up or perform an account recovery. That means payment notices, query overage notices and all other similar emails will no longer contain your API key.

Including the key in these emails was mostly a holdover from a time when we didn't have passwords or account recovery systems. We didn't want users to lose their keys, and including them in our emails was a way to guarantee they wouldn't be lost. Times have moved on significantly since then, and we agree with the customers who raised this with us that it's time to stop including keys in the majority of our emails to you.
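The email policy described above (the API key appears only in sign-up and account recovery emails) can be expressed as a simple allow-list. This is a minimal sketch, not our mail system's code: `MailType`, `KEY_ALLOWED`, `should_include_api_key` and `render_mail` are hypothetical names chosen for illustration.

```python
from enum import Enum, auto


class MailType(Enum):
    # Hypothetical categories of automated email.
    SIGNUP = auto()
    ACCOUNT_RECOVERY = auto()
    PAYMENT_NOTICE = auto()
    QUERY_OVERAGE = auto()


# Only these mail types are permitted to carry the API key.
KEY_ALLOWED = frozenset({MailType.SIGNUP, MailType.ACCOUNT_RECOVERY})


def should_include_api_key(mail_type: MailType) -> bool:
    """Return True only for mail types on the allow-list."""
    return mail_type in KEY_ALLOWED


def render_mail(mail_type: MailType, body: str, api_key: str) -> str:
    """Append the API key to the body only when the policy allows it."""
    if should_include_api_key(mail_type):
        return f"{body}\n\nYour API key: {api_key}"
    return body


if __name__ == "__main__":
    # A payment notice is rendered without the key.
    print(render_mail(MailType.PAYMENT_NOTICE, "Payment received.", "abc123"))
```

Using an allow-list rather than a block-list means any new type of automated email excludes the key by default unless it is deliberately added.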

We hope everyone has had a great Easter break. As always, if you have any thoughts to share, feel free to contact us. We love hearing from you :)

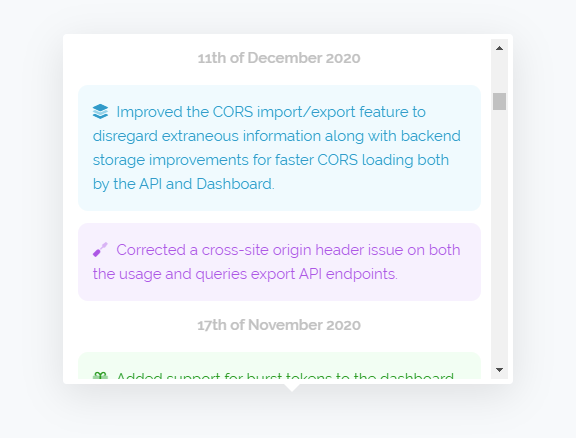

Above is the new changelog v2 interface, which is now live across all our pages. As you can see, it's colour-coded: new features are listed in green, changes to existing features in blue and fixes in purple. We've also added some icons to the side, which are more for fun than functionality. The new interface follows the updated interface guidelines that we've been adjusting the site to meet over the past several months.