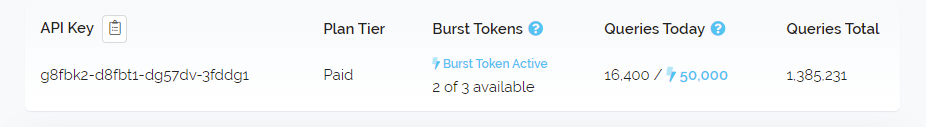

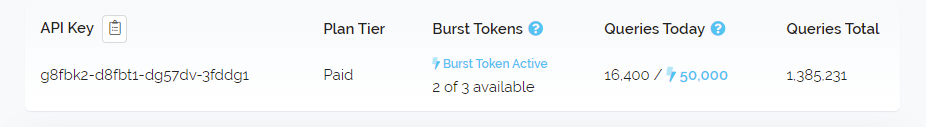

Earlier this month we made some enhancements to the dashboard UI to help you see more easily whether a burst token is currently in use and by how much it is boosting your daily query allowance. Below is a screenshot showing how this looks when a token is active.

This feature is very important to us because it helps you know whether it's time to upgrade your plan. Understanding how often your tokens are active and how they affect your plan allowance lets you make an informed decision about your plan, and hopefully saves you money by staving off upgrades until they become necessary.

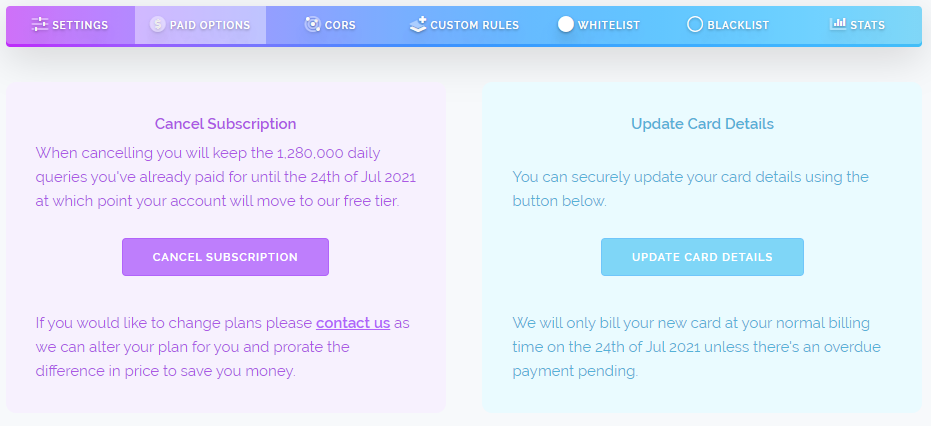

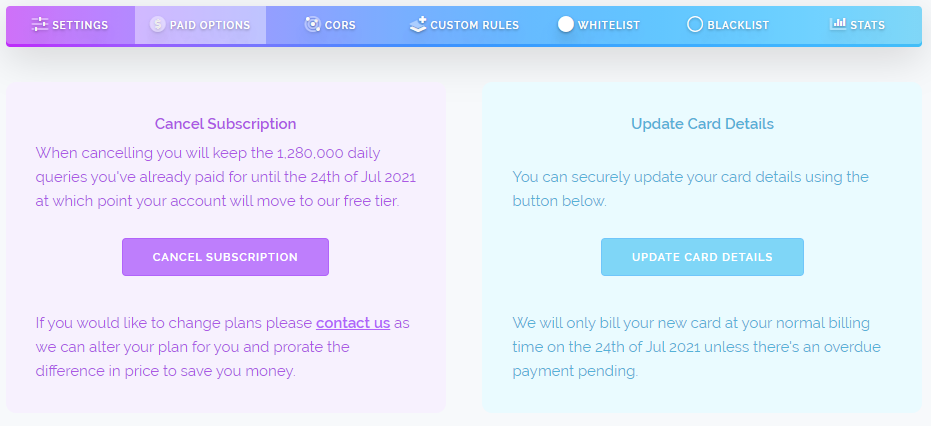

The other change we've made is on the paid options tab. We used to have a very large cancellation area on this tab, so big that many customers didn't scroll down to find the other paid options, such as updating your card details when your previous card expires, accessing invoices and looking at other paid plans you may want to upgrade or downgrade to.

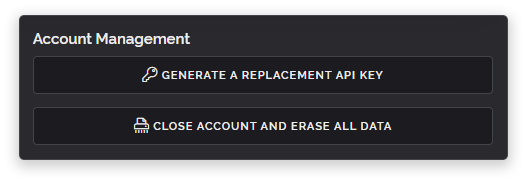

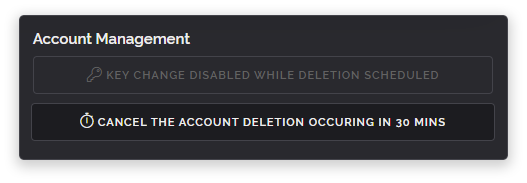

To fix this we've rewritten this section. A lot of the extraneous text has been removed and what remains is clearer and more concise. We always want you to be able to cancel a plan on your own without needing to talk to anybody. We strongly believe you should be able to cancel something even more easily than you signed up for it, and we believe we're continuing to offer that, as shown in the screenshot below.

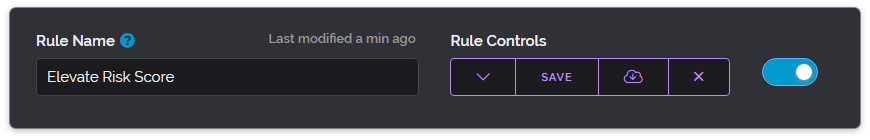

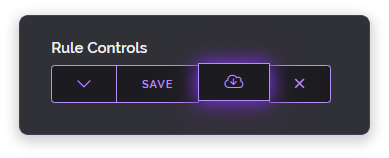

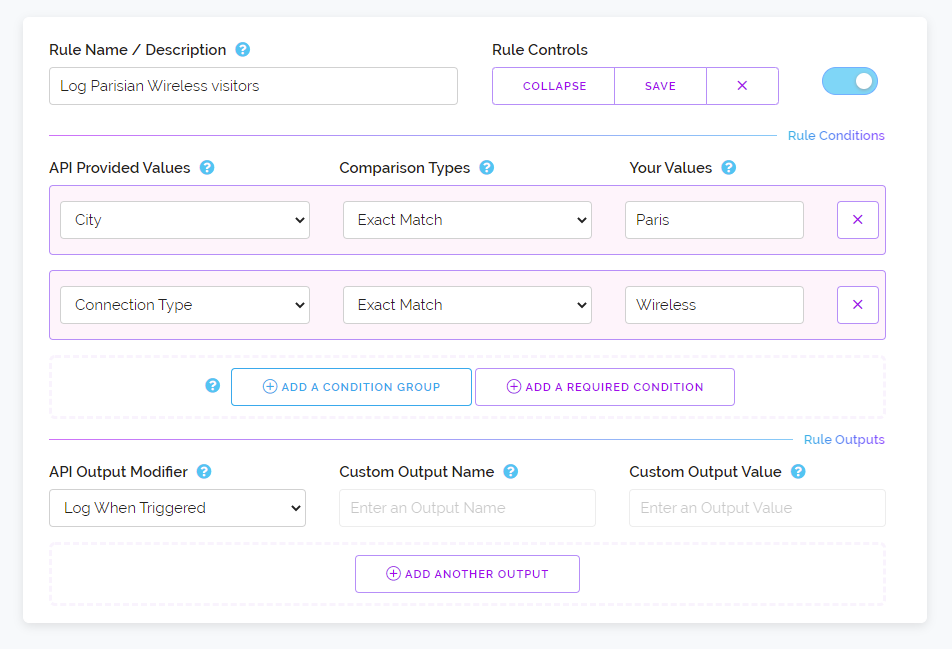

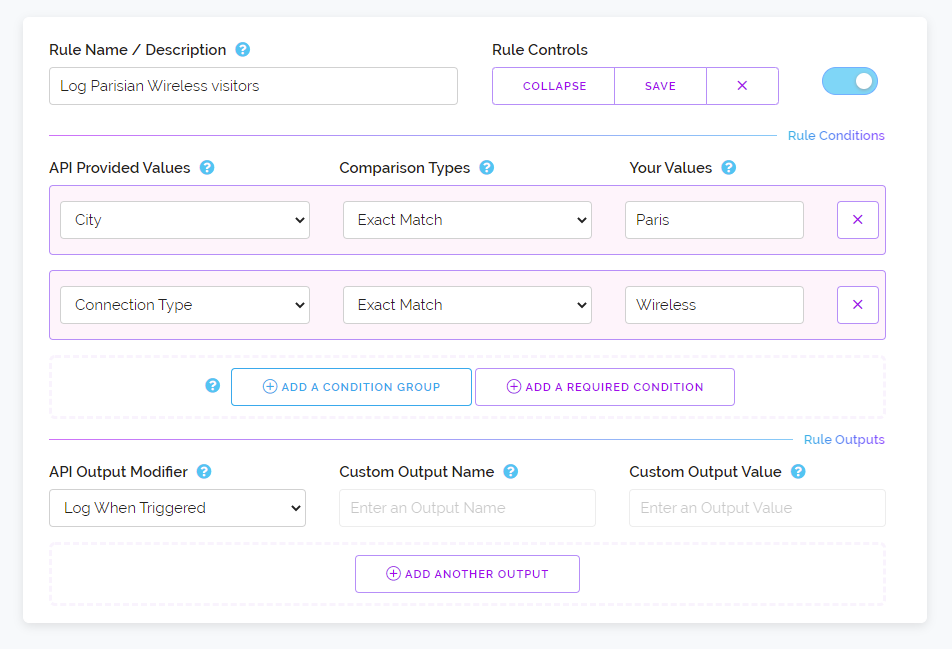

And finally, we've added two new output modifiers to the custom rules feature. These allow you to forcibly log, or forcibly disable logging for, anything that matches the conditions within a rule. This was a requested feature. Until now we only ever logged positive detections made by our API, or results that your rule altered to become positive, for instance by changing a proxy: no result to a proxy: yes result.

But sometimes you want to log something that you don't want to be set as a proxy, VPN or other positive kind of detection. Sometimes you want to allow a visitor, not detect their address as something bad and still log them using our system instead of your own local database or analytical service.

As we've received a request for this feature and we think it could be useful to most customers, we've added two modifiers which can force logging on or off regardless of the detection type. These entries will appear in your positive log as type: rule entries.

Above is an example of how to set up such a rule to log connections from Paris that are Wireless in nature. You can add these logging outputs to any kind of rule, even if you've already added other output modifiers like changing a connection type or modifying a risk score. This makes the new modifiers very flexible, and they can be added to any rule you've previously created.
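If it helps to see the logging behaviour as logic, here is a rough Python sketch of how the decision could be modelled. It is only an illustration: rules are configured in the dashboard rather than in code, and the names `LogModifier`, `rule_matches`, `should_log` and `log_decision` are made up for this example and are not part of our API.

```python
from enum import Enum
from typing import Optional


class LogModifier(Enum):
    """Hypothetical names for the two new logging output modifiers."""
    FORCE_ON = "force logging on"
    FORCE_OFF = "force logging off"


def rule_matches(city: str, connection_type: str) -> bool:
    """Condition from the example rule: Wireless connections from Paris."""
    return city == "Paris" and connection_type == "Wireless"


def should_log(is_positive: bool, modifier: Optional[LogModifier]) -> bool:
    """Decide whether a lookup ends up in the positive log."""
    if modifier is LogModifier.FORCE_ON:
        return True   # logged regardless of the detection type
    if modifier is LogModifier.FORCE_OFF:
        return False  # never logged, even for a positive detection
    # No logging modifier applied: only positive detections are logged.
    return is_positive


def log_decision(city: str, connection_type: str, is_positive: bool) -> bool:
    """Apply the example rule (force logging on), then decide."""
    modifier = LogModifier.FORCE_ON if rule_matches(city, connection_type) else None
    return should_log(is_positive, modifier)
```

In this model a clean Wireless visitor from Paris is logged because the rule forces logging on, while a clean visitor from anywhere else is not logged, which matches the previous behaviour.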

That's it for this update and we hope you're all having a great week.