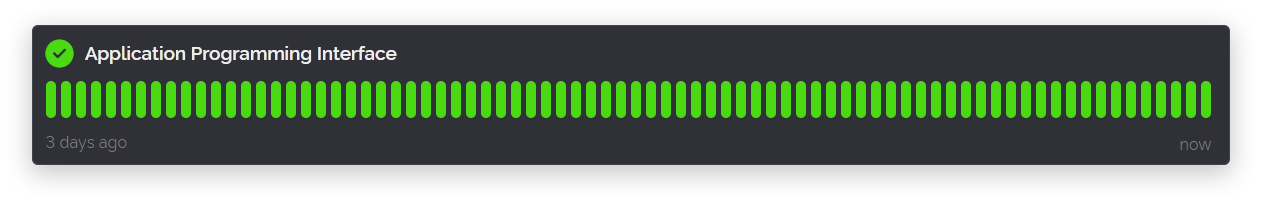

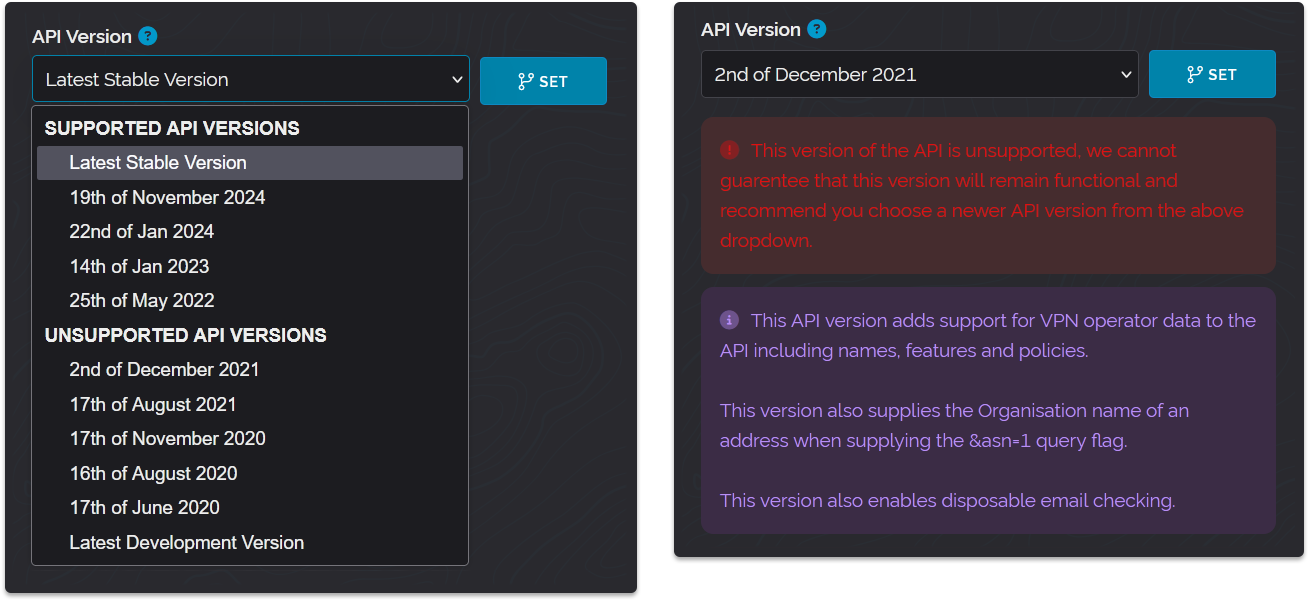

Today we've changed the API version selector in customer dashboards so that it clearly shows which versions of our API are supported and which are no longer supported.

We've made this change for two reasons. First, it lets us stop supporting older versions of the API, which frees up more resources for newer versions. Second, it gives customers greater control over when to upgrade their API version to gain access to new features and improvements.

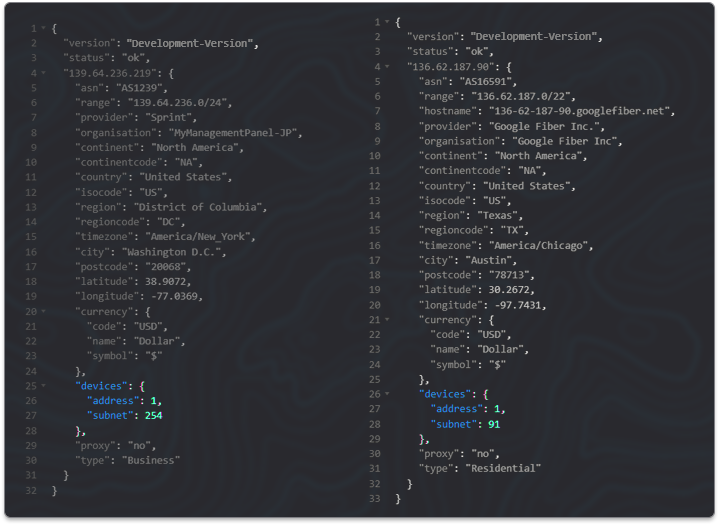

To expand on that second point: until now, we have only issued a new API version when we changed the JSON output format in a way we believed could break customer implementations. From now on, we will issue a new API version whenever we make any substantial code change (anything beyond maintenance or bug fixes), even if it doesn't alter the output format.

We want to release more API versions like this to insulate our customers from mistakes and errors as we introduce new features and changes.

By giving customers control over when to upgrade, even for minor features and changes, we can protect them from bugs we may have missed during development and testing. We felt the need for this after introducing our new location engine last month: it had some problems at release that required us to temporarily roll back the update and make rapid fixes before redeploying it.

We're very fortunate to have understanding and patient customers who made us aware of these location data regressions and gave us time to fix them. Reflecting on their feedback, we realised that bundling the new location data update into our previous release (which introduced device estimates) was the wrong decision; we should instead have issued a new major version of the API alongside this feature.

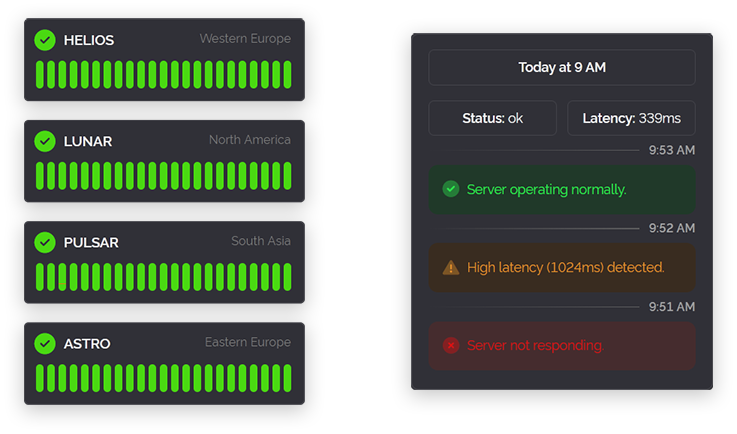

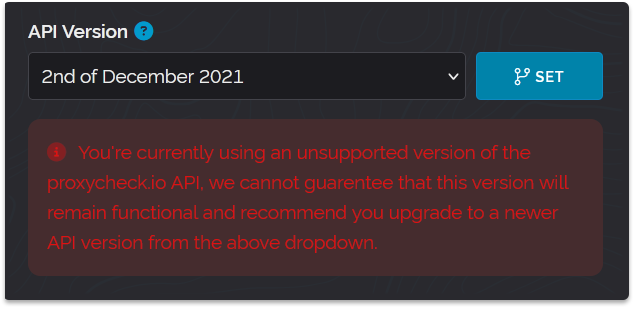

Above, on the left, is the new API version dropdown selector; on the right is what it looks like when you choose an unsupported version of the API. Below is what you'll see if you're already running an unsupported version of the API.

We wanted to make sure these notices couldn't easily be missed, hence the big red warnings. Going forward, we will support each API version for a minimum of three years from its release. Currently, we support every version released since May 2022, because that was when a large shift happened in the codebase with the introduction of Custom Lists. We will likely support versions for longer than this minimum, depending on how time-intensive it is to port changes back to them.

That's the update for today. As always, thanks for reading and have a great week!