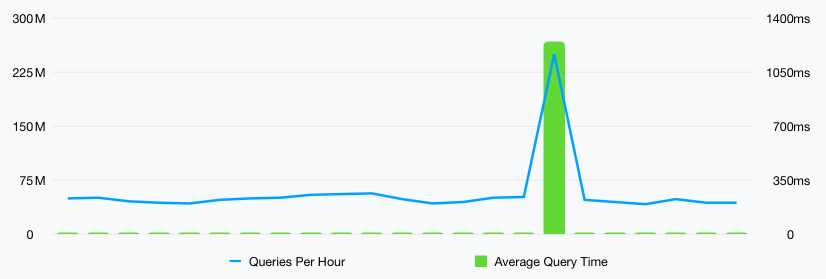

As the proxycheck service has become more popular, we've found that our prior syncing system was not living up to expectations. The main problem was high CPU usage caused by the volume of statistics that needed to be processed and synchronised.

To combat this issue in the past we added update coalescing. With this approach, each of our servers maintains a local object which stores all the raw statistics for a period of 60 seconds, and then all our servers transfer their objects to the server that has been selected to process statistics for that time period. The selected server is regularly rotated, but to maintain database coherency only one server can perform writes at any one time.
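To illustrate the general idea of update coalescing, here is a minimal Python sketch. It is not our production code: the class name `CoalescingBuffer`, the `(customer_id, stat)` key layout and the injectable `clock` parameter are all assumptions made for the example. Only the 60-second window comes from the description above.

```python
import time
from collections import Counter


class CoalescingBuffer:
    """Hypothetical local object that coalesces raw statistic updates.

    Increments are summed in memory and handed off as one batch per
    window, instead of being sent to the processing server one by one.
    """

    def __init__(self, window_seconds=60.0, clock=time.monotonic):
        self.window_seconds = window_seconds
        self._clock = clock              # injectable so the window is testable
        self._counts = Counter()
        self._window_start = clock()

    def record(self, customer_id, stat, amount=1):
        """Add one raw statistic update to the current window."""
        self._counts[(customer_id, stat)] += amount

    def flush_if_due(self):
        """Return the coalesced batch once the window has elapsed, else None."""
        if self._clock() - self._window_start < self.window_seconds:
            return None
        batch = dict(self._counts)
        self._counts.clear()
        self._window_start = self._clock()
        return batch
```

In a sketch like this, the caller would send any non-`None` batch to whichever server is currently selected to perform writes. Many API queries for the same customer collapse into a single counter, so the processing server sees one update per key per window.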

This had worked well for the past few years, but as we've grown this method wasn't enough on its own. Whereas at the beginning we could update your stats within 90 seconds of you making an API query, lately we've found that some statistics can take up to an hour to show, depending on the load level of the node that originally accepted your API query.

This is quite obviously unacceptable, which is why today we've gone through and rewritten the way all statistics are synchronised. We're still using our local object approach with update coalescing, but we've completely reprogrammed the methods for sending, chunking, checksumming and verifying data.
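For readers curious what chunking, checksumming and verifying can look like in general, here is a small Python sketch. It does not show our actual implementation: the function names `make_chunks` and `verify_and_merge`, the JSON payload format, the `"customer:stat"` key format and the choice of SHA-256 are all assumptions made purely for illustration.

```python
import hashlib
import json


def make_chunks(stats, chunk_size=2):
    """Split a stats dict into chunks, each tagged with a SHA-256 checksum."""
    items = sorted(stats.items())
    chunks = []
    for start in range(0, len(items), chunk_size):
        # Serialise one slice of (key, value) pairs into a compact payload.
        payload = json.dumps(
            items[start:start + chunk_size], separators=(",", ":")
        ).encode("utf-8")
        chunks.append({
            "seq": start // chunk_size,
            "payload": payload,
            "sha256": hashlib.sha256(payload).hexdigest(),
        })
    return chunks


def verify_and_merge(chunks):
    """Check every chunk's checksum, then merge the payloads into one dict."""
    merged = {}
    for chunk in sorted(chunks, key=lambda c: c["seq"]):
        if hashlib.sha256(chunk["payload"]).hexdigest() != chunk["sha256"]:
            raise ValueError(f"checksum mismatch in chunk {chunk['seq']}")
        for key, value in json.loads(chunk["payload"]):
            merged[key] = merged.get(key, 0) + value
    return merged
```

A sending node would call `make_chunks` on its local object and transmit the chunks. The receiving node would call `verify_and_merge` and could ask for a resend of any chunk that fails verification, rather than the whole object.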

The biggest change this brings is a significant reduction in CPU usage. Synchronising statistics had become such a burden that some of our servers were spending up to 25% of their CPU utilisation on statistic syncing. With the new system we're seeing huge reductions, with only around 5-6% CPU usage when handling the synchronisation of live customer statistics, and that usage occurs over much briefer time periods. Stats are now synchronised within just 2 to 3 seconds, whereas before, even in the best-case scenarios, syncing was taking several minutes or longer.

This change is live right now, which means you should see every stat in your dashboard (and on our dashboard API endpoints) update much more quickly.

Thanks for reading and have a great weekend!