That title sure is a mouthful, and I'm sure you're scratching your head right now just trying to decipher it! Hopefully, after reading this post, you'll understand exactly what this new feature is. (If you'd rather just read the documentation, the Custom Rule documentation has been updated here.)

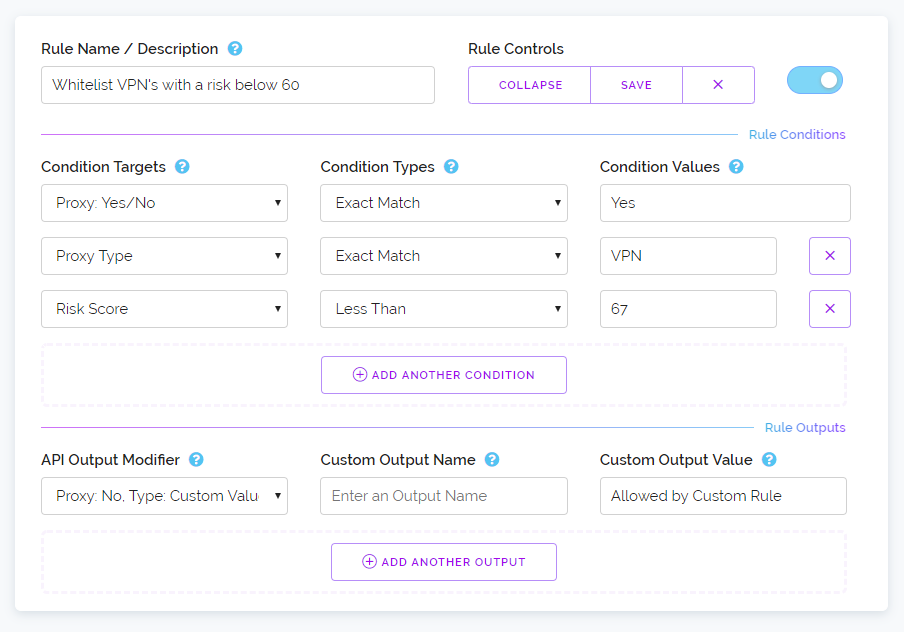

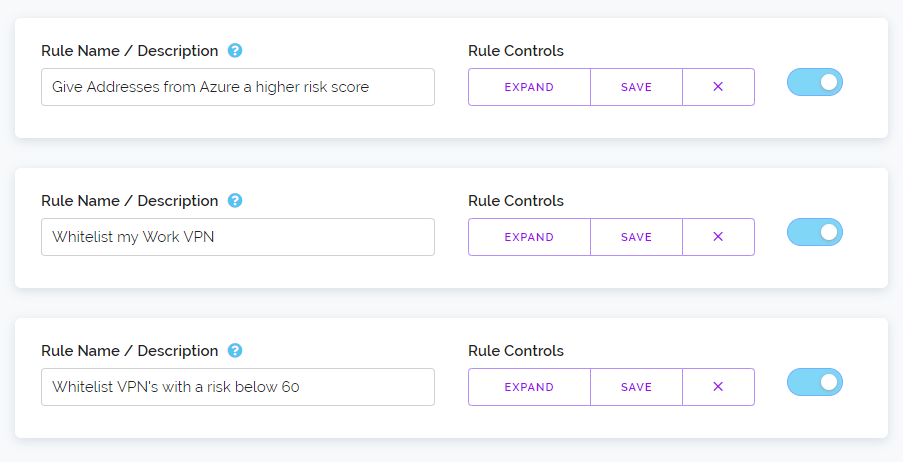

When we added the Custom Rules feature late last month, we listed one of its main features as the ability to customise the output of our API. This means you can provide values that will be shown in the API result when your rule's conditions are satisfied.

For example, if you wanted to add an extra field containing some text to the API output, you could do that. What we didn't provide at the time were variables, which let you include data from the API result in your customised fields. Today that changes with the introduction of variables, and we think we've done it in a way that's really simple to understand.

Put simply, we have a list of 14 variables, such as %IP%, %COUNTRY%, %ASN% and %TAG%, which you can include in your custom output value boxes when you create rules. When the API sees one of these variable names, it replaces it with the data that variable represents.
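As a rough illustration of that substitution step, here is a minimal Python sketch. It assumes variables are uppercase names wrapped in percent signs, as in the examples in this post. The `substitute_variables` helper, the sample data, and the choice to leave unknown names untouched are all our own assumptions for illustration, not how the API is actually implemented.

```python
import re

# Matches placeholders like %IP%, %COUNTRY% or %RULENAME%: uppercase letters,
# digits and underscores between two percent signs (an assumed format).
PLACEHOLDER = re.compile(r"%([A-Z0-9_]+)%")


def substitute_variables(template: str, values: dict) -> str:
    """Replace each %NAME% in template with values[NAME] (hypothetical helper).

    Names missing from values are left as-is; that is an assumption of this
    sketch, not documented API behaviour.
    """
    def replace(match: re.Match) -> str:
        return values.get(match.group(1), match.group(0))

    return PLACEHOLDER.sub(replace, template)


# Hypothetical data for one checked IP address.
result = {"IP": "203.0.113.7", "COUNTRY": "Spain"}
print(substitute_variables("Visitor from %COUNTRY% (%IP%)", result))
# Visitor from Spain (203.0.113.7)
```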

So if you use %COUNTRY% and check an IP from Spain, that variable will be swapped out for the word Spain. Pretty simple, right? So why are we doing this? It's so you can further customise the output of our API to suit your needs and do some clever things with it.

For instance, if you want to add content to a custom tag you've supplied with a query, you can write your new message and then include the %TAG% variable at the end. This effectively adds your custom information to the start of the tag: you're overwriting the old tag, but the new tag also contains a copy of the old one.

Say you had a custom rule called "Block Russia" which always detects Russian IPs as proxies, your tags all look like "example.com/myblog/login.php", and you want the tag to note that the IP was detected as a proxy because of a custom rule. You could supply a custom value like "Activated by Rule: %RULENAME%, %TAG%", which would output "Activated by Rule: Block Russia, example.com/myblog/login.php".
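To make the "Block Russia" example concrete, the sketch below rewrites a tag using only the two variables it needs. The `rewrite_tag` helper is hypothetical and handles just %RULENAME% and %TAG%; it illustrates the idea rather than reproducing what our API does internally.

```python
import re


def rewrite_tag(custom_value: str, rule_name: str, original_tag: str) -> str:
    """Expand %RULENAME% and %TAG% in a rule's custom tag value (hypothetical)."""
    values = {"RULENAME": rule_name, "TAG": original_tag}
    # A single regex pass: text inside the substituted values is never re-expanded.
    return re.sub(r"%(RULENAME|TAG)%", lambda m: values[m.group(1)], custom_value)


new_tag = rewrite_tag(
    "Activated by Rule: %RULENAME%, %TAG%",
    rule_name="Block Russia",
    original_tag="example.com/myblog/login.php",
)
print(new_tag)  # Activated by Rule: Block Russia, example.com/myblog/login.php
```

Doing the replacement in one pass means that if the original tag itself happened to contain text like %RULENAME%, it would be kept literally rather than expanded a second time.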

That would then appear in the positive detection log in your dashboard on our website, letting you easily track when a positive detection was caused by one of your rules rather than being an organic result.

So you can see the power that variables provide. We know you'll find them useful because we're using the custom rules feature ourselves on our own websites, forums and blogs, and much of the new functionality, such as replacing tag content and adding output variables, is a direct result of using our own product. That said, custom rules are still under active development, so keep checking back for more updates!

Thanks for reading and have a great weekend.