On the 20th of April, proxycheck.io reached its three-year milestone. In that time we've served many billions of queries and added many features. To celebrate, we decided to redo our API documentation so that it matches the level of quality our service delivers. So let's go through some of the changes we've made.

- Each section of the documentation is there because it's useful, and less repeated information makes it faster to read.

- Anchor points throughout the document, making it possible to link to specific sections.

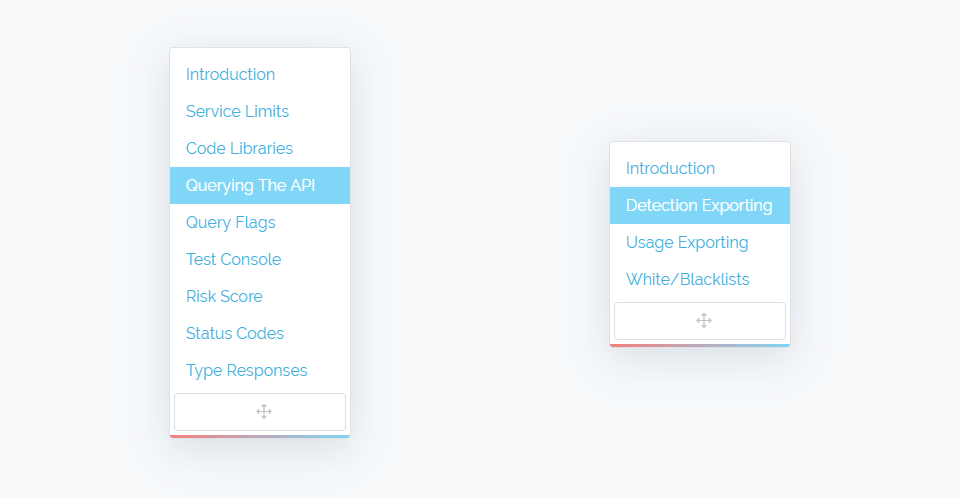

- A side menubar that lets you jump straight to the section you're interested in and follows your progress through the document.

- A moveable menubar so you can position it wherever you'd like it to be.

- Straightforward tables showing feature, limit and other breakdowns with colour formatting.

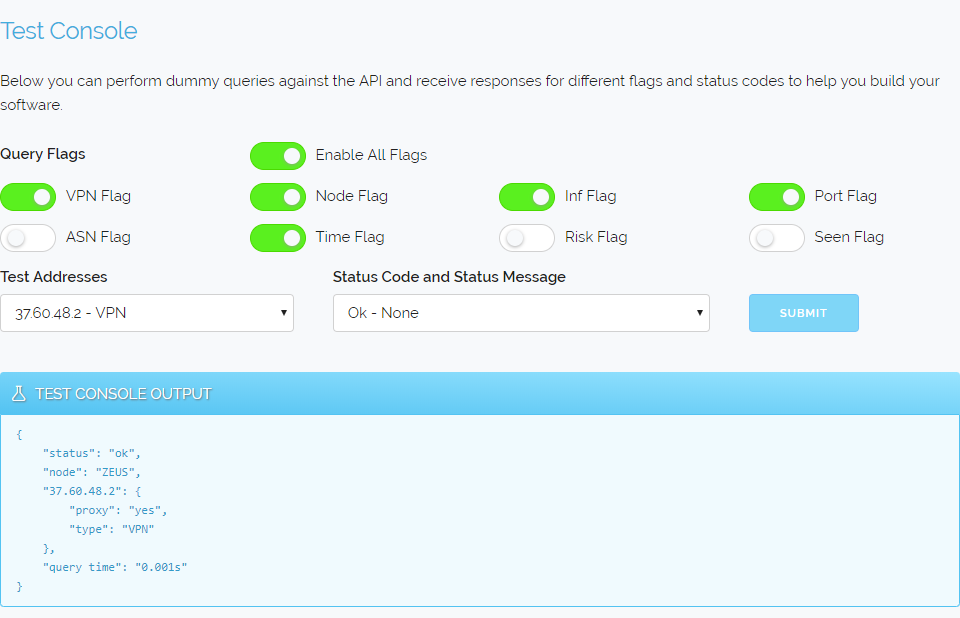

- A test console so you can try all the flags and status codes right in the document itself.

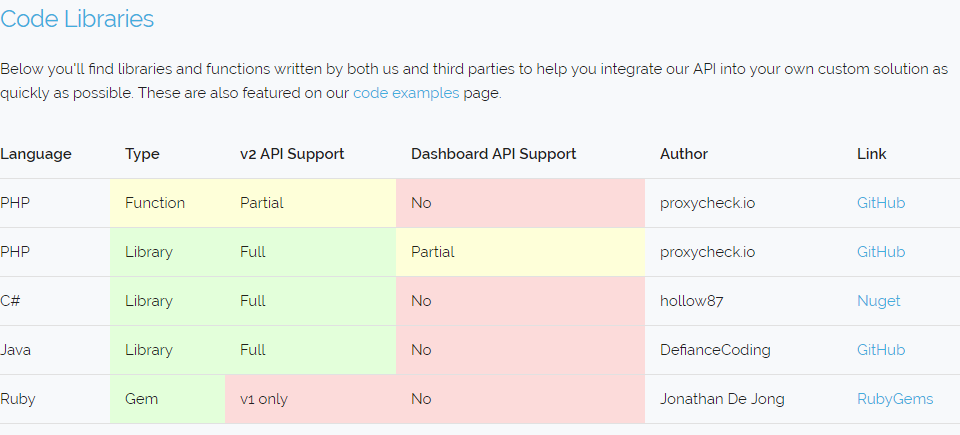

- Showcased coding libraries and their features so you don't waste time reinventing the wheel.

So let's get to the screenshots! First, we'd like to showcase our new side menus, which not only track your position through the API documentation based on where your cursor is but can also be dragged anywhere on the screen, where they will stay. This is especially useful for users with small screens who may need the menus to overlay some of the document content.

The next thing we wanted to illustrate is our new library section, where we feature many implementations for our API, written by ourselves and other developers, that you can use to jump-start your own use of our API.

If you have created a function, class or library for our API that you would like us to feature in our documentation, please contact us!

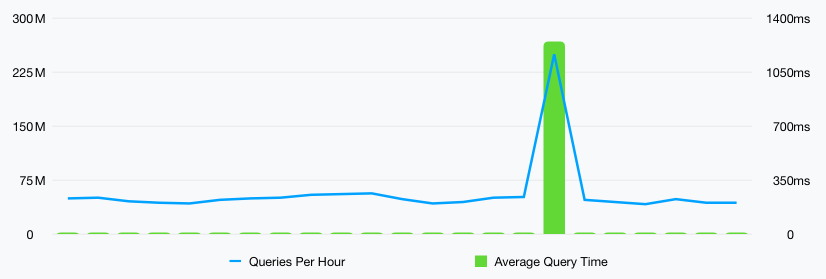

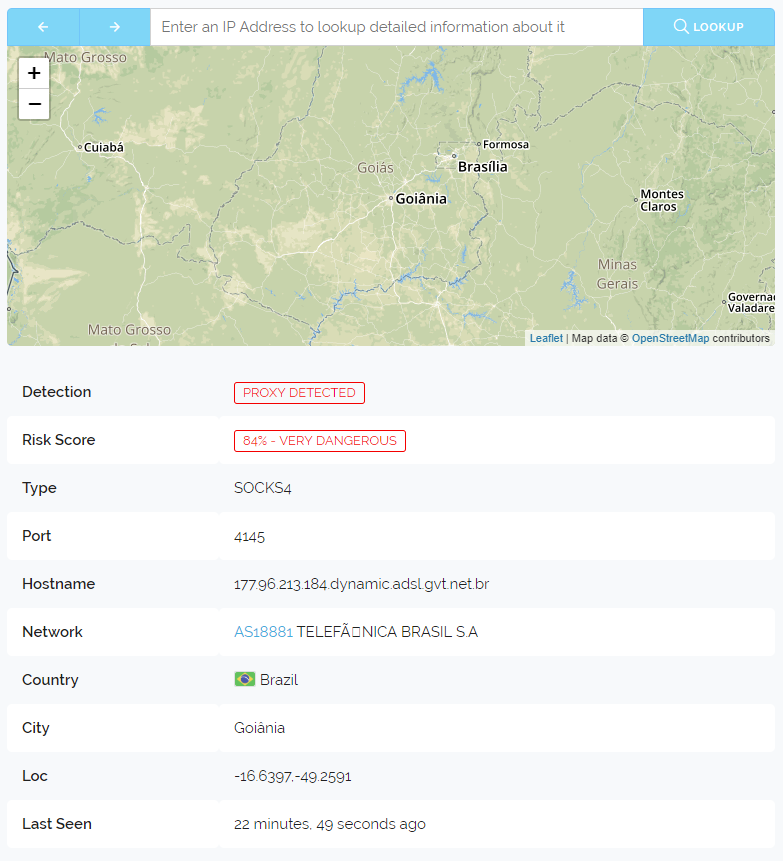

Now, if you do decide to build your own client for our API, what better way is there to familiarise yourself with our formatting and status codes than to try them right in your browser? So we've included a new test console that lets you see every possible answer from our API with a high degree of customisability.
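To give a feel for what a hand-written client has to do with those status codes, here is a minimal Python sketch. It is an illustration under stated assumptions rather than a reference implementation: the response shape (a top-level `status` field plus an entry keyed by the queried address), the status values `ok` and `warning`, the `proxy` and `message` fields, and the helper name `interpret_response` are all assumed for the example, so verify each of them against the documentation and the test console.

```python
import json

# Assumption for illustration: these status values mean the query was answered.
# Any other value is treated as a failure by this sketch.
SUCCESS_STATUSES = {"ok", "warning"}


def interpret_response(raw_json, ip):
    """Return (is_proxy, message) for `ip` from an assumed JSON response body.

    `interpret_response` is a hypothetical helper, not part of any official library.
    """
    data = json.loads(raw_json)
    status = data.get("status")
    message = data.get("message")  # assumed optional, human-readable note

    # Refuse to interpret a result if the query itself did not succeed.
    if status not in SUCCESS_STATUSES:
        raise RuntimeError(f"query failed: status={status!r}, message={message!r}")

    # Assumed shape: the result for an address is stored under the address itself.
    entry = data.get(ip, {})
    is_proxy = entry.get("proxy") == "yes"
    return is_proxy, message


# Hand-written sample payload using a documentation-only address (RFC 5737).
sample = '{"status": "ok", "203.0.113.7": {"proxy": "yes", "type": "VPN"}}'
print(interpret_response(sample, "203.0.113.7"))
```

Because the function works on a response body rather than making the request itself, you can paste answers from the test console straight into it and check how your client would react to each one.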

We hope that you'll find these new features highly useful when developing for our API. We know that the documentation has needed these changes for some time, and we've been planning to overhaul it since the beginning of this year. We spoke with multiple customers during the design of our new documentation page and they were instrumental in recommending changes and additions.

If you have any feedback, please contact us and let us know; we would very much like to hear from you. Thanks for reading and we hope everyone has a great week!