Today we've made two significant changes to the positive detection log that appears in your dashboard. Both changes relate to the tagging feature.

If you're unaware, the tag feature allows you to supply a small piece of text with each query you make, for your own reference. We then display those "tags" back to you alongside the query in your dashboard if the queried address was detected as a Proxy Server or VPN Server, or was Blacklisted by one of the blacklists you've supplied in your dashboard.

The first change is that if you supply the value 0 for your tag (i.e. &tag=0 in the URL), we will not save that specific query to your positive detection log, even if the address is a Proxy, a VPN or Blacklisted. Essentially, you can now turn off the positive detection log on a query-by-query basis.
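As a rough illustration of opting out per query, here is a minimal Python sketch that builds a query URL with or without a tag. Only the `tag` parameter and the `0` value come from this post; the `build_query_url` helper and the `https://api.example.com/v2` base URL are placeholders made up for the example, so substitute the real endpoint from the API Documentation page.

```python
from urllib.parse import quote, urlencode


def build_query_url(base_url, ip, tag=None):
    """Build a query URL for one IP (hypothetical helper, not part of the API).

    tag=None  -> no tag parameter is sent at all
    tag="0"   -> asks for this query to be kept out of the positive detection log
    tag="..." -> any other text is sent as a normal tag
    """
    # Keep ":" unescaped so IPv6 addresses stay readable in the path.
    url = f"{base_url.rstrip('/')}/{quote(ip, safe=':')}"
    if tag is not None:
        url += "?" + urlencode({"tag": tag})
    return url


# Placeholder base URL, assumed for illustration only.
BASE = "https://api.example.com/v2"

print(build_query_url(BASE, "1.2.3.4", tag="0"))           # opt out of logging
print(build_query_url(BASE, "1.2.3.4", tag="login form"))  # normal tagged query
print(build_query_url(BASE, "1.2.3.4"))                    # untagged query
```

Sending the tag as the string "0" rather than leaving it out matters here, because an untagged query is treated differently from one tagged with 0.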

This feature was requested by users who want complete privacy. With it enabled, the IPs you test are never held on our servers for more than a few minutes and are not committed to any kind of log.

The second change is that if your tag is blank, meaning you don't supply the tag parameter at all, we will only save the entry to your positive detection log if your storage use for positive detections is under 10MB for the current month.

So what does that second change mean? If you tag your queries with a piece of text, nothing changes for you: we will always log and save those queries regardless of how much storage they use. But if you don't supply a tag of any kind, we will not save them while you're using over 10MB of our storage for your positive detection log within the current month.
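Taken together, the two rules can be summarised as a small decision function. This is only a sketch of the behaviour described in this post, not our actual server code: the `should_log` name is invented for the example, and it assumes 10MB means 10,000,000 bytes and that "under 10MB" is a strict comparison.

```python
# Assumption: 10MB is treated as 10,000,000 bytes for this illustration.
UNTAGGED_STORAGE_LIMIT_BYTES = 10_000_000


def should_log(tag, storage_used_bytes):
    """Decide whether a positive detection is saved to the log (illustrative only).

    tag                -- the tag sent with the query, or None/"" if none was sent
    storage_used_bytes -- positive detection log storage used so far this month
    """
    if tag == "0":
        # Rule 1: a tag of 0 switches logging off for this query.
        return False
    if tag:
        # Any other tag text: always logged, regardless of storage used.
        return True
    # Rule 2: untagged queries are only logged while under the monthly limit.
    return storage_used_bytes < UNTAGGED_STORAGE_LIMIT_BYTES


print(should_log("0", 0))                  # False
print(should_log("signup", 50_000_000))    # True
print(should_log(None, 2_000_000))         # True
print(should_log(None, 50_000_000))        # False
```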

We've made this second change because users sometimes come under huge proxy-based attacks. For example, 5 to 10 million positive detections in a 24-hour period on a single account is not unheard of for us. Storing all of those positive detections can be burdensome, as they take up gigabytes of storage space.

These changes are live across both our v1 and v2 API endpoints, and the API Documentation page has been updated to reflect them as well.

Thanks for reading and we hope everyone is having a great week.

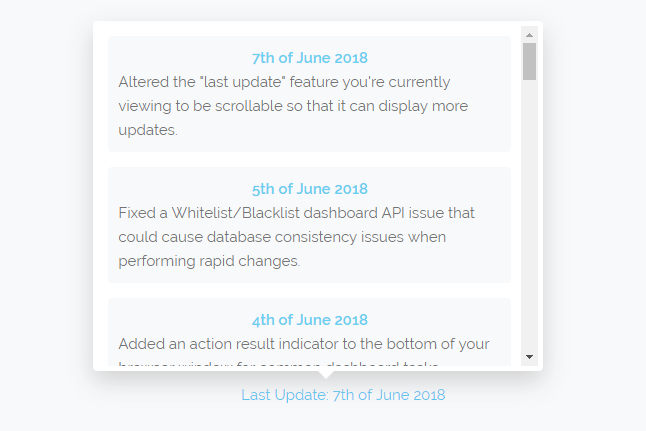

Above is a screenshot showing the Dashboard's last update window which now displays 23 updates going back to October last year.
