We know that choosing the right security partner can be difficult. There is a learning curve when integrating an API, and you have to consider the costs associated with both implementation and usage.

That's why we've been promoting third-party plugins that integrate our API into your software: they remove some of the anxiety and make implementation straightforward. One such plugin, which we've been very fortunate to have had made on our behalf for WordPress, is called Proxy & VPN Blocker, and it's made by an independent developer called Ricksterm.

It was released in December 2017 and has received regular, substantial updates since then. It was the first third-party plugin to integrate our v2 API, and it's the only plugin we're aware of that can show your real-time query usage and positive detection log outside of our own customer dashboard.

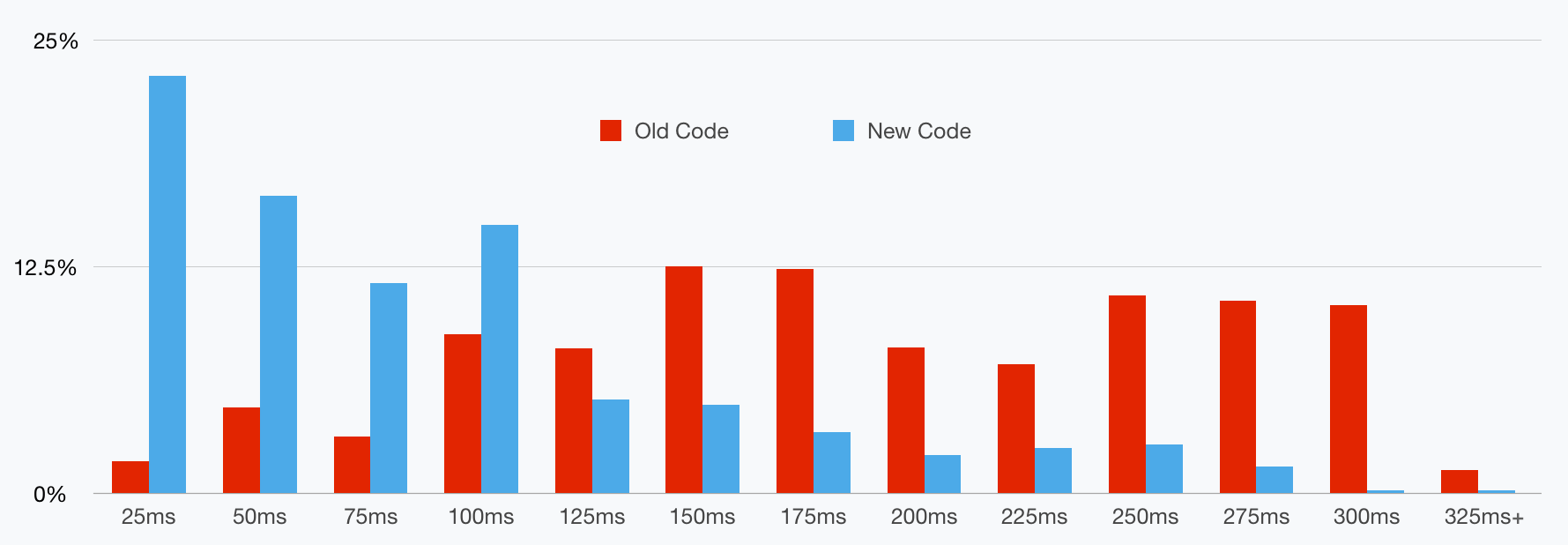

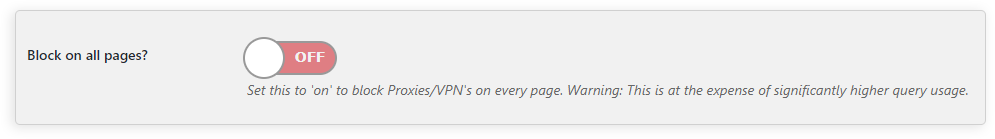

Recently it gained the ability to protect not just your login, registration and comment submission pages but also your entire website if you so choose, while caching API responses to save you money and reduce its impact on your page loading times. Its most recent update even added the ability to block countries.

With WordPress being used by an estimated 30% of websites, we feel this plugin is very important, so we want to incentivise our customers to donate towards the plugin's continued development. To that end, in partnership with Ricksterm, we're offering two promotions.

If you donate $15 to the plugin through its WordPress plugin page, you will be given our 10,000 queries a day package for a period of one year. This package usually costs $19.10 when purchased annually or $23.88 when paid for monthly.

We're also offering a promotion for the next package up. If you donate $30, you'll receive our 20,000 queries a day package for one year, which usually costs $38.30 when purchased annually or $48.88 when paid for monthly.

We (proxycheck.io) will not receive any of the money you donate. It will all go to Ricksterm, who develops the WordPress plugin; we're merely giving you a free gift for your donation to him.

When donating either $15 or $30, please supply your email address to Ricksterm through the donation note feature so that he can pass it on to us and we can give your account the query volume specified above. And of course, make sure you've signed up to proxycheck.io first!

One last thing to note: you don't need to use the queries exclusively with the WordPress plugin. They are normal queries, exactly the same as those you would receive when making a purchase through our own website, so you can use them in any way you like, with any plugin or with your own implementation of our API.

We hope many of you will take advantage of these promotions, as they represent quite significant savings. We intend to continue offering them for as long as the WordPress plugin remains actively supported by its developer.

If you've made, or intend to make, a plugin of this quality, please let us know. We would love to feature your plugin on our website and perhaps even support you with a similar promotion so that you too can earn from your contribution to our API.

Thanks!