Over the past couple of weeks we've been working on upgrading the v2 API in a few specific ways.

- Increasing result speed by lowering query latency for both single and multi-queries.

- Giving you more control over the resolution of your queries.

- Giving you more information with your positive detection results.

1. Increasing Speed

We've approached speed from several angles. Most of our queries are single-address queries, so our goal was to dramatically decrease the time it takes to process them. Multi-checks are already quite fast because of the multi-stage cache priming that happens while the first IP Address is processed, which makes processing the subsequent addresses very fast.

So the question has been: how do we get the same performance benefits those primed caches deliver when we're only processing a single query? We think we've accomplished that by creating a new process on our servers in which we can hold all of our proxy data in its most efficient format.

We had already been using RAM disks to hold data, but the data was not stored in its most efficient format and our RAM disk carried a lot of overhead for features we don't use. Our new custom process does away with all of this and is designed specifically for our use case.

So what's the result of all this? Well, in testing we've been able to reduce single query latency to around 1ms before network overhead. This is with VPN checks and the real-time inference engine turned off; we'll be working to improve the performance of both later.

We're still testing and benchmarking these changes, but so far the results are promising and we expect to be able to switch over to the new code soon.

2. More Control

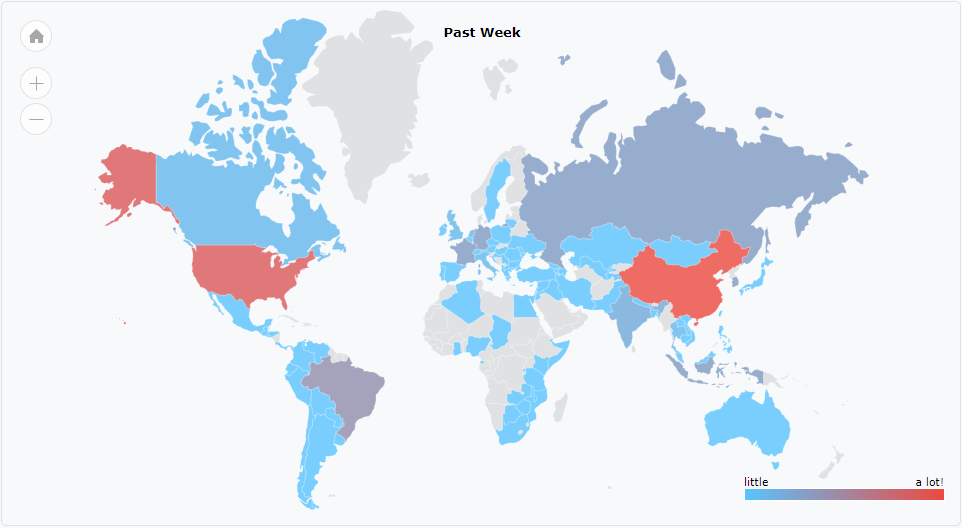

The second thing we've been working on is giving you more control over the resolution of your queries. This means you can specify, in days, how close to the present time you want your results to be restricted to. For example, you may want to detect only proxies which have been seen operating within the past 3 days, as opposed to the past 7-15 days (the API's default maximum).

This new feature has been implemented as a flag called &day=#, making it very easy to use. We're going to allow a resolution scale from 1 day to 60 days, giving you great flexibility between very conservative detection, which may miss some proxies, and very liberal detection, which may present some false positives.

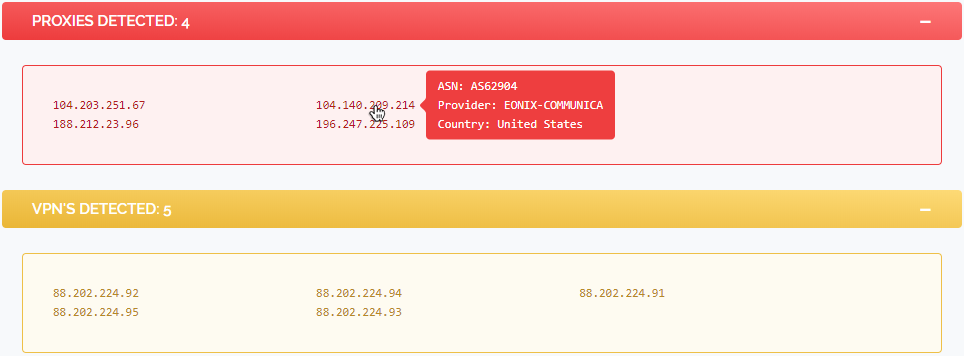
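
As a rough illustration of how the &day flag might be used from client code, here is a minimal Python sketch. The base URL and the helper name `build_query_url` are hypothetical placeholders, not part of the API; only the `day` flag and its 1 to 60 range come from the description above.

```python
from urllib.parse import urlencode

# Hypothetical base URL (an assumption); substitute the real v2 endpoint.
BASE_URL = "https://api.example.com/v2"

# Resolution range described in this post: 1 day to 60 days.
MIN_DAYS, MAX_DAYS = 1, 60


def build_query_url(ip: str, days: int) -> str:
    """Return a query URL that restricts results to the past `days` days."""
    if not MIN_DAYS <= days <= MAX_DAYS:
        raise ValueError(f"days must be between {MIN_DAYS} and {MAX_DAYS}")
    # The flag name "day" follows the &day=# form given in this post.
    return f"{BASE_URL}/{ip}?{urlencode({'day': days})}"


if __name__ == "__main__":
    print(build_query_url("1.10.176.179", 3))
```

Checking the range on the client side is a design choice in this sketch; how the API itself treats out-of-range values is not covered here.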

3. More Information

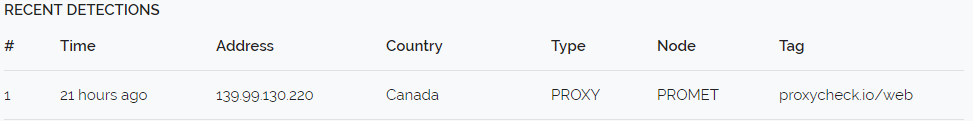

This is a combination of a few features. Firstly, because we're allowing you to specify the resolution of your detection results in days, we're also going to give you the ability to see the last time we saw an IP Address operating as a proxy server. You'll be able to activate this feature by supplying the flag &seen=1 with your queries. We'll be displaying the result in both a human-readable "x time ago" format and as a UNIX timestamp.

The other feature we're adding is the ability to view port numbers. This has been requested more times than we can count. It isn't something we've wanted to expose on the API because, frankly, it serves no security benefit. But given how easy it is to scan an IP Address to discover running servers, we've decided to implement the feature based on customer feedback. To activate this feature you'll be able to supply &port=1 as a query flag.

Below we've included a paste containing a query result provided by the new API version with both &seen=1 and &port=1 flags supplied.

    {
        "node": "PROMETHEUS",
        "1.10.176.179": {
            "proxy": "yes",
            "type": "SOCKS",
            "port": "8080",
            "last seen human": "5 hours ago",
            "last seen unix": "1520297212"
        },
        "query time": "0.001s"
    }
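
To show how a result like the one above could be consumed, here is a minimal Python sketch that parses the sample and converts the port and UNIX timestamp into native types. The function name `summarize` and the shape of its output are illustrative assumptions; the field names are taken from the sample result.

```python
import json
from datetime import datetime, timezone

# The sample result from this post, reproduced as a string.
RESPONSE = """
{
    "node": "PROMETHEUS",
    "1.10.176.179": {
        "proxy": "yes",
        "type": "SOCKS",
        "port": "8080",
        "last seen human": "5 hours ago",
        "last seen unix": "1520297212"
    },
    "query time": "0.001s"
}
"""


def summarize(raw: str) -> dict:
    """Map each IP address in a result to a small dict of typed fields."""
    data = json.loads(raw)
    results = {}
    for key, value in data.items():
        if not isinstance(value, dict):
            continue  # skip top-level metadata such as "node" and "query time"
        entry = {"proxy": value.get("proxy") == "yes", "type": value.get("type")}
        if "port" in value:  # only present when &port=1 was supplied
            entry["port"] = int(value["port"])
        if "last seen unix" in value:  # only present when &seen=1 was supplied
            entry["last_seen"] = datetime.fromtimestamp(
                int(value["last seen unix"]), tz=timezone.utc
            )
        results[key] = entry
    return results


if __name__ == "__main__":
    for ip, info in summarize(RESPONSE).items():
        print(ip, info)
```

Treating every top-level object as a per-address result is an assumption based on this one sample; the final response format may differ.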

When are these changes coming?

We're still validating the new API. The changes we've made essentially constitute a brain transplant: almost all the code that actually performs the checking associated with a query has been completely gutted and replaced with a new, more efficient system based around our new on-server caching process.

It's our hope to have the new v2 API updated later this month, and we may even back-port parts of it to v1, which the majority of our customers are still using. We're also going to be overhauling the API Documentation page, as these changes have grown the number of available flags considerably, necessitating a redesign of the page layout.

Thanks for reading. We hope everyone is excited to try the new API; we're certainly excited to get it finished for you all to enjoy.